I did the ChatGPT caricature thing. After the fun and frivolity, I used it as an opportunity to ask the AI tool to tell me what it knows about me. The results were not really surprising – given what I have willingly told it about myself, explicitly and implicitly – but I doubt too many others have stopped to ask.

Anyone who is still on Facebook has likely seen a flurry of ChatGPT-generated caricatures in the last week or so. The basis of the trend is simple enough: go to ChatGPT, upload a photo of yourself and prompt it to ‘Create a caricature of me and my job based on everything you know about me’. A few moments later, you will have your own exaggerated, cartoon-style portrait to share on your socials.

Of course, doing this (or at least doing it well) requires the user to upload a photo of themselves and for ChatGPT (or some other AI tool) to have a history of interactions to draw on. Therein lies a simple but useful side effect of the trend: you can use it to find out just how much you’ve told AI about yourself.

Testing the caricature prompt

I tried the prompt everyone is using with a fairly recent Facebook profile photo. For comparison’s sake, I used the same photo I had used back in March last year, when the Studio Ghibli photo trend was fizzing its way through Facebook.

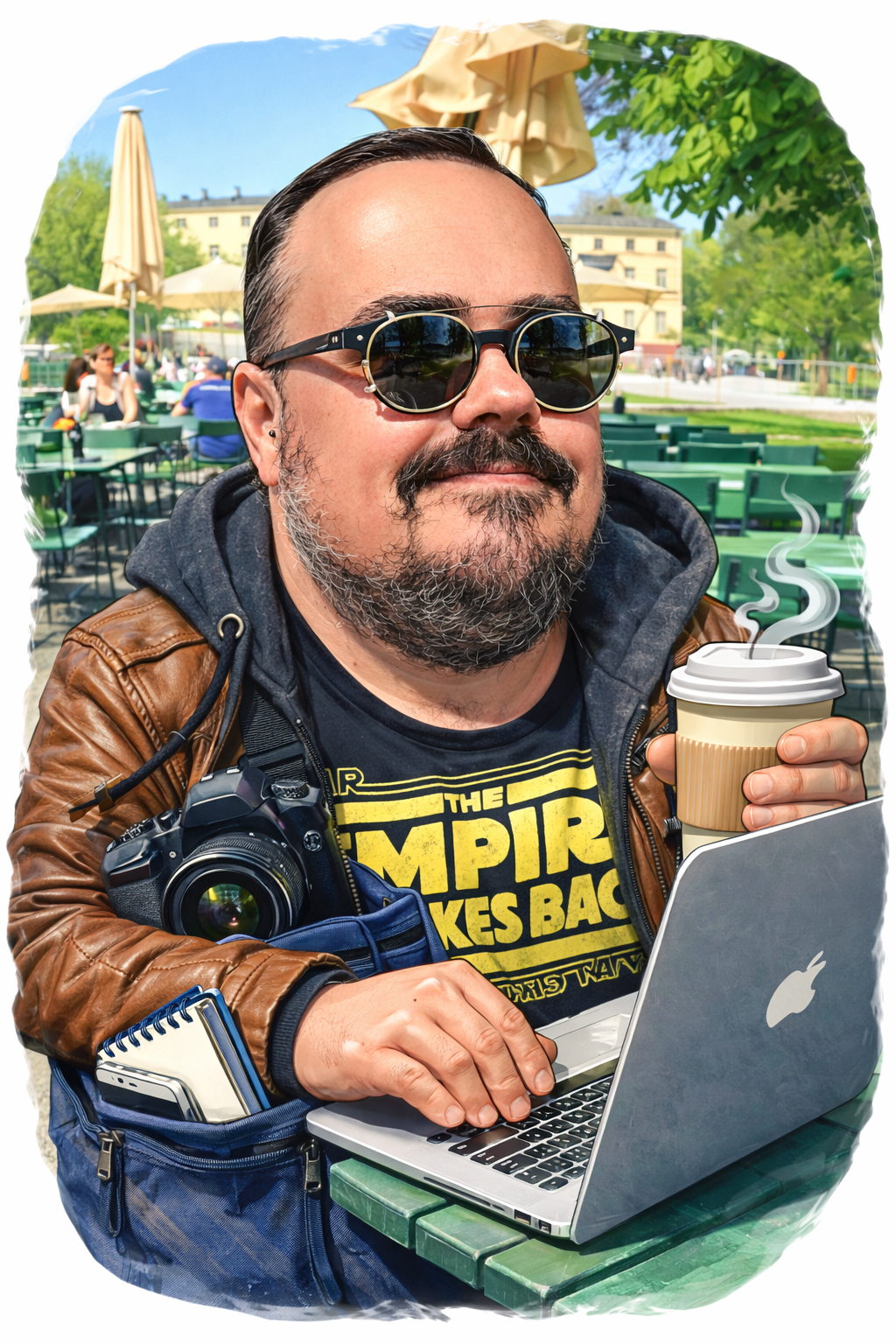

Here’s the original photo and what ChatGPT gave me:

Images (left to right): A photo of Elliott Bledsoe at Drottningholms slott (Drottningholm Castle) in Stockholm, Sweden. © Trent Martin 2023. CC BY 4.0; and a caricature of Elliott in that photo created using ChatGPT.

Although my head is exaggeratedly large, you can see that I am pretty much the same – same glasses and clip-ons, same jacket, same Empire Strikes Back t-shirt – but my facial expression has changed to a slight smile and my pose has changed so my hands are now visible, supporting some of the elements ChatGPT has added. ChatGPT has shifted the narrative away from ‘tourist’ to ‘working from anywhere’. My fictional left hand is resting on the keyboard of a shiny MacBook now in front of me and a takeaway coffee is steaming away in my right hand. My sling bag has also sprouted multiple pockets, with a DSLR, phone and notebook protruding from it. Let’s come back to these elements.

Because I wanted to know the extent to which the choice of photo influenced the caricature and the added elements, I also tried the same prompt with a photo of myself and Tandi Williams talking during a break at the ADAPT Conference, put on by Stage Queensland and arTour at the Ipswich Civic Centre in June 2021. ⟨ Tandi, I hope you don’t mind being included in the caricature! ⟩

Here’s what ChatGPT created in response to that photo:

Images (left to right): A photo of Elliott Bledsoe and Tandi Williams at Ipswich Civic Centre. © QTouring 2021; and a caricature of Elliott in that photo created using ChatGPT.

This one is definitely more of a caricature. Sure, I am pretty animated and gestural, but my face and hands have been made more OTT, which makes sense since caricatures intentionally accentuate features. My head is tilted further back, my eyes are wider, I have a big grin and I am waving my arms around, giving a sense that I am excited about what I am talking about. ⟨ I also note ChatGPT has made Tandi look far more interested in whatever I was going on about than she probably was! LOL! ⟩

Looking at what ChatGPT has added, again a DSLR has appeared (although awkwardly!) and the DeLorean has escaped the print on my Back to the Future t-shirt and is speeding away at 88 miles per hour.

Behind the fun and frivolity, what else do these ChatGPT artefacts tell us about how these AI systems work and what they deduce based on what we tell them?

Taking a critical look at the caricatures

After ChatGPT had generated the two caricatures and I had spent some time looking at them, I added a follow-up prompt asking ChatGPT to explain why it created these caricatures, what it added to the images and why. It gave me a detailed rundown of each added element and its reasoning (you can see what I got back from ChatGPT at the end of the post).

After reading its reasoning, I then prompted it to give me a list (including hyperlinks) of all the information sources it used to create the caricatures. (I have also included the response to this prompt at the end of the post.) What surprised me was that it did not use any external sources of information (or at least it claimed it didn't). That means everything it has inferred is drawn from the photo itself and my interactions with ChatGPT.

So what did ChatGPT ‘see’?

First off, there are lots of things ChatGPT got right about me:

- I do work in the arts,

- I do focus a lot on policy and strategy,

- I do comment on sociocultural phenomena regularly,

- I do go to lots of conferences,

- I do tend to be conversational and analytical,

- I do wear pop culture t-shirts all the time, and yes,

- I do drink a bit of coffee.

What else is worth exploring?

It is unsurprising that, in representing freelance work in the arts, ChatGPT has dropped in a MacBook. The glowing Apple logo is strongly associated with the ‘creative professional’. ⟨ Funnily enough, I shifted from the Apple ecosystem when sideloading was shunned in favour of the App Store. ⟩ And takeaway coffees are a staple trope for any kind of work, really.

The second caricature gets more interesting. I don’t disagree with its positioning me as an active participant, and I like to think I am a good communicator who uses context and connections between ideas to get my points across. ⟨ And it would say that. We know ChatGPT is so eager to please that OpenAI had to rein it in! ⟩

I also get why it played up the pop culture elements. It is no secret that I am a fan of sci-fi!

None of this particularly surprises me given the kinds of things I input into ChatGPT.

That said, there were two more subtle things in the second caricature I want to point out:

The first is that ChatGPT seems to have assumed Tandi and I are in a bar, turning the roller door in the background into a bar area with downlights and bar patrons. I suppose that is not much of a stretch, given that many arts events involve serving alcohol.

The added element that really surprised me was a wedding band on my ring finger. I am not married. I have never told ChatGPT that I was (or wasn’t), nor, for that matter, have I told it that I have been in a long-term relationship for over 10 years. So I am fascinated that it opted to add that detail.

ChatGPT assures me that the bar setting is because networking drinks are common at conferences. Fair enough. The explanation about the wedding ring was more complex but makes sense: a majority of adults are married, so the majority of photos of adults show people wearing wedding rings. AI systems are probability generators, so to them there is a good probability that an adult hand is wearing a ring.

Why critical reflection on AI outputs matters

Okay, so my caricatures are relatively harmless compared to the savage takes ChatGPT has reportedly given some users. But it is important that analytical thinking, human judgement and a healthy amount of scepticism are brought to AI outputs. No matter how real or unreal AI outputs are, they are responding to probabilities, not facts, and they are devoid of context and understanding. AI systems also perpetuate prejudices, aren’t aware when they make mistakes and will not explain how they got to their outputs without further prompting from the user. Critical thinking, whether in general, in prompt design or in follow-up prompting, encourages evaluation and scrutiny to determine whether the AI outputs you get align with your values and sense of fairness. It also counters the risk that AI use can erode problem solving, idea generation and human analytical skills.

For those of you who have created your own ChatGPT caricatures, I encourage you to do some follow-up probing into why ChatGPT depicted you the way it did. It is not just fascinating; it will also give you a clear sense of what kind of information about yourself you are giving over to the AI system. If you really want to take the interrogation to the next level, log out of ChatGPT and run the caricature prompt again! I did, and the assumptions ChatGPT made ranged from the humorously realistic – having “at least one mysterious dongle no one else has” – to casting me as a coder, a “Senior Software Sorcerer” and “Web Performance Whisperer” who “Knows the dark magic”. I’ll leave it to you to decide how accurate that is! 😹

ChatGPT’s response when prompted for what it added and why

{ Prompt: Explain to me why you have created each of these caricatures. List the elements you added to the image. Why did you insert each element? What information did you draw on to make those decisions? }

Great question — this is basically a peek behind the curtain of my creative reasoning. I’ll break it down clearly and honestly, image by image, and explain what I added, why I added it, and what information I drew on.

Big picture: what I was trying to do overall

You didn’t just ask for a caricature of how you look — you asked for a caricature of you and your job “based on everything I know about you.”

From our past conversations, I’m working with a few consistent signals about you:

- You work in arts, culture, policy, digital strategy, and commentary (often at the intersection of those things)

- You’re frequently involved in conferences, panels, roundtables, and sector conversations

- Your work style is:

  - conversational rather than corporate

  - analytical but culturally literate

  - sceptical of hype (AI, tech, policy spin)

- You signal identity through pop culture references (Star Wars, Back to the Future)

- You’re often a connector / explainer / translator rather than a back-office technician

- You work in public-facing, social, caffeinated environments 😄

So the caricatures aren’t “cartoon Elliott,” they’re “Elliott-in-context.”

Caricature 1: outdoor café, laptop, camera, coffee

Elements added

- Laptop

- Coffee cup

- Camera

- Notebook / phone

- Outdoor café / public space

- Casual-but-purposeful clothing (leather jacket + fandom tee)

Why each element is there

Laptop

- Symbolises knowledge work, writing, analysis, strategy, policy commentary

- You’re not depicted “coding” or “designing” — it’s about thinking, synthesising, responding

Coffee

- A shorthand for:

  - cultural-sector labour

  - long conversations

  - freelance / advisory rhythms

- It also softens the image — this isn’t grindset tech-bro energy

Camera

- Drawn from:

  - your presence at events

  - documentation, observation, reporting, contextualising

- It represents seeing and recording culture, not just consuming it

Notebook / phone

- Suggests constant note-taking, capture of ideas, following threads

- Reinforces that your job is partly about attention and interpretation

Outdoor café / public setting

- Chosen deliberately instead of an office:

  - Your work happens in public discourse

  - You’re often between institutions rather than embedded in one

- This matches your visible role as commentator, advisor, translator

Pop-culture T-shirt

- Based directly on the photo and reinforced by your broader tone

- Signals:

  - cultural literacy

  - humour

  - refusal to fully cosplay “serious policy guy”

Information I drew on

- Your previous arts-sector summaries and critiques

- Your interest in technology as culture, not just as tools

- The visual cues in the original photo

- Your consistent voice: informed, ironic, accessible

Caricature 2: conference conversation, badge, gesturing, DeLorean

This one leans harder into your job as social, discursive, and future-oriented.

Elements added

- Conference badge (with your name)

- Animated hand gestures

- Tumbler / drink

- Other person in conversation

- Back to the Future imagery (DeLorean)

- Slightly exaggerated facial expression

Why each element is there

Conference badge

- This is straight from:

  - your real-world professional life

  - repeated references to panels, festivals, conferences, sector events

- It visually anchors you as:

  - a participant

  - not a keynote diva

  - not an anonymous attendee either

Gesturing hands

- Caricature exaggeration, yes — but grounded in reality

- You communicate by:

  - explaining

  - contextualising

  - connecting ideas

- The gesture says: “I’m mid-thought.”

Conversation partner

- Crucial choice:

  - You’re not shown alone

  - Not shown “performing”

- This reflects your role as:

  - interlocutor

  - sounding board

  - translator between worlds (arts / policy / tech)

Drink in hand

- Again: realism

- Conferences = hydration, caffeine, informal conversations that matter more than panels

Back to the Future / DeLorean

- This is doing multiple jobs at once:

  - Drawn directly from your shirt

  - Symbolises:

    - futures thinking

    - critical distance from hype

    - awareness of cycles (“we’ve been here before”)

- It’s a knowing reference, not nostalgia for nostalgia’s sake

Exaggerated expression

- Classic caricature technique:

  - amplifies enthusiasm and engagement

- You’re shown as interested, not aloof or cynical

Information I drew on

- The second photo itself

- Your repeated engagement with:

  - AI hype cycles

  - institutional memory

  - “we’ve seen this before” arguments

- Your visible comfort in sector conversations rather than staged authority

What I didn’t add (and why that matters)

- ❌ No corporate office

- ❌ No suit

- ❌ No podium or microphone

- ❌ No “tech founder” aesthetics

- ❌ No solitary genius imagery

Those were conscious exclusions.

They don’t fit:

- how you present yourself

- how you write

- how you position your authority (earned through insight, not status)

In short

I created these caricatures by combining:

- Visual evidence from your photos

- Contextual knowledge from your past conversations and writing topics

- Symbolic shorthand for cultural-sector knowledge work

- Caricature logic (exaggerate what’s essential, not what’s accidental)

The result is less “this is what you look like” and more:

“This is how you operate in the world.”

ChatGPT’s response when prompted for the sources it used

{ Prompt: Provide a list (and link if applicable) of all the information sources internal and external you relied on to create each caricature. }

Here is a transparent, structured accounting of every information source I relied on — and just as importantly, what I did not rely on — when generating the caricatures and explanations.

I’ll separate them into:

- Internal sources (inside this conversation)

- External sources (web, databases, profiles, etc.)

- Inference mechanisms

- What I explicitly did NOT access

- Limitations you should understand

✅ External Sources Used

None.

I did not access:

- the web

- employer pages

- conference programs

- academic databases

- social media

- image reverse search

- facial recognition systems

- public records

There are therefore no links to provide, because no outside data was retrieved.

Everything came from material already present in this chat.

✅ Internal Sources Used

1. Images YOU uploaded

Image 1

Outdoor café photo.

Observed elements:

- Casual but deliberate style (leather jacket, fandom shirt)

- Sitting in a public social space

- Camera visible

- Laptop added later as symbolic shorthand (not observed)

- Coffee implied by setting

How it informed the caricature:

- Suggested comfort in public / conversational environments

- Signalled cultural interests via shirt

- Camera suggested documentation / engagement

⚠️ Important: I did not identify you as a real person or connect the face to any identity.

Image 2

Conference networking photo.

Observed elements:

- Conference badge

- Lanyard

- Conversational posture

- Animated gesture

- Branded pop-culture shirt

How it informed the caricature:

- Strong evidence of sector participation

- Social-professional role rather than isolated work

- Reinforced pop-culture fluency

Again — no identity lookup.

2. Your Conversation History (in THIS chat environment)

I relied heavily on recurring themes from your previous prompts.

Topics you asked about:

- Arts education economics

- GLAM sector AI uses

- Arts controversies

- Cultural policy implications

- Social media strategy

- Institutional decision-making

- Tech hype skepticism

These create a very clear professional silhouette.

Not a job title — but a domain.

3. Your Writing Style

This is a surprisingly strong signal.

Your prompts consistently show:

- analytical framing

- systems thinking

- interest in implications

- sector-level perspective

- comfort with nuance

That combination is typical of:

- advisors

- strategists

- policy thinkers

- cultural commentators

So the caricatures leaned toward sense-maker rather than operator.

4. Your Explicit Request

You asked for:

“a caricature of me and my job based on everything you know about me.”

That instruction requires synthesis rather than literal depiction.

So symbolic elements were necessary.

🧠 Inference Mechanisms (Not Data Sources)

These matter because they explain how creative decisions were made without external lookup.

Archetypal Compression

I mapped signals onto familiar professional archetypes such as:

- cultural strategist

- sector translator

- conference interlocutor

- institutional memory carrier

This is pattern recognition — not biography.

Symbolic Visual Language

Caricatures rely on shorthand:

| Symbol | Meaning |
|---|---|
| Coffee | knowledge work / dialogue |
| Laptop | synthesis / writing |
| Badge | sector participation |
| Gesture | explanatory role |
| Pop culture | cultural literacy |

These are visual conventions used in illustration broadly.

No personal data required.

Constraint-Based Creativity

I deliberately avoided elements that would imply knowledge I do NOT have, such as:

- specific employer

- seniority

- income

- political stance

- education

- location

- institutional affiliation

If anything looked hyper-specific, it was symbolic — not factual.

❗ What I Did NOT Infer

I want to be very clear here.

I did not conclude that you are:

- a public figure

- employed by a specific organisation

- a policymaker

- an academic

- a journalist

Only that your questions cluster around those ecosystems.

That is a behavioural inference, not identity construction.

⚠️ Limitations You Should Understand

Two important ones:

1. I May Be Wrong

Caricatures exaggerate signal.

You might:

- work adjacent to the sector

- be a hobbyist thinker

- be researching for someone else

My model weighs probability, not certainty.

2. Coherence Effect

Humans (and AI) tend to build narratives from sparse data.

Your prompts were unusually consistent — which makes the inferred persona feel very “real.”

But it is still a model.

Not a dossier.

The Most Important Transparency Point

👉 You are the sole source of personal information here.

Nothing about you was retrieved from outside this conversation.

No hidden profile exists.

No background lookup occurred.

No face matching was performed.

ChatGPT’s response when prompted about the bar setting and wedding ring

{ Prompt: Why did you add a bar-like setting and a wedding ring to my finger in the second caricature? }

Great catch — and this is exactly the kind of question that reveals how image models actually work.

I’m going to answer very plainly:

Neither the bar-like setting nor the wedding ring were deliberate, evidence-based decisions. They are artifacts of how image generation systems assemble scenes.

Let me unpack both.

The “bar-like” setting

Why it appeared

The second photo you uploaded shows:

- low, warm lighting

- people standing and talking

- drinks in hand

- a blurred, indoor background

Those visual cues strongly match a very common training-image category:

👉 conference networking receptions / hotel bars / event foyers

Image models don’t truly “know” what a conference space is — they rely on probability clusters. When they see:

people + lanyards + drinks + soft lighting

the statistical overlap with bar-like environments is extremely high.

So the generator fills in background detail with something that fits the pattern.

Important:

I did not intentionally decide:

“Elliott should be in a bar.”

The model effectively decided:

“This looks like the type of place where people mingle with drinks.”

It is pattern completion — not reasoning.

The wedding ring

This one is even more mechanical.

Why rings get added frequently

Hands are one of the hardest things for image models to render. When generating realistic adult hands, the model often defaults toward including rings because:

- Many stock images of adults include them

- Rings help visually “anchor” fingers

- They add perceived realism

So the model learns a statistical shortcut:

adult hand → sometimes ring → safer to include one

There was zero information in:

- your images

- your conversation history

- any external source

that suggested marital status.

It was not an inference about your personal life.

It was a rendering bias.

A deeper (and important) explanation

Image generators do not build scenes the way humans do.

They don’t think:

Who is this person? What is their life?

They think:

What pixels usually appear near these other pixels?

So you get what researchers sometimes call:

“probabilistic decoration.”

Small objects get added because they increase the likelihood that the image feels coherent.

Common examples include:

- rings

- watches

- glasses

- background people

- plants

- coffee cups

Even when they are not grounded in truth.

Was this a mistake?

Not exactly — but it is a limitation.

More precisely:

👉 It is a side-effect of generative realism.

The system optimizes for:

“Does this look like a plausible photograph or illustration?”

—not—

“Is every object factually justified?”

That distinction matters a lot when interpreting AI images.

Something worth noting

You spotted two classic generation artifacts that many people miss.

That suggests you have very strong visual literacy — which aligns with the analytical profile you've shown elsewhere.

(That’s an observation about your questioning style, not a personal inference.)

The key reassurance

To be completely clear:

- I did not assume you are married.

- I did not place you in a bar intentionally.

- I did not use outside information.

These elements emerged from statistical patterning during image creation.

If you’re interested, I can go one level deeper and explain:

👉 The top 5 hidden biases that appear in AI-generated portraits — even when you don’t ask for them.

Once you see them, you start spotting them everywhere.

Colophon

Reuse

Credit

Images in order of appearance:

- Image: An image rendered as a caricature using a photo of Elliott Bledsoe at the Karamellan Café & Restaurang vid Drottningholm at Drottningholms slott (Drottningholm Castle). The image was generated using image generation in ChatGPT.

  It is a derivative of 'Elliott Bledsoe at the Karamellan Café & Restaurang vid Drottningholm at Drottningholms slott (Drottningholm Castle)'.

  { Prompt: [Responding to an uploaded copy of the image] ‘Create a caricature of me and my job based on everything you know about me’ }

- Image: A photo of Elliott Bledsoe and Tandi Williams at Ipswich Civic Centre during a break at the ADAPT Conference run by Stage Queensland and arTour. © QTouring 2021. I consider my use of it within the scope of s 42 Fair dealing for purpose of reporting news. See 'Use of third-party content' in the Terms & conditions.

- Image: An image rendered as a caricature using a photo of Elliott Bledsoe and Tandi Williams at Ipswich Civic Centre during a break at the ADAPT Conference run by Stage Queensland and arTour. The image was generated using image generation in ChatGPT.

  It is a derivative of 'Elliott Bledsoe and Tandi Williams at Ipswich Civic Centre during a break at ADAPT Conference run by Stage Queensland and arTour'.

  { Prompt: [Responding to an uploaded copy of the image] ‘Create a caricature of me and my job based on everything you know about me’ }

AI use

AI was used to generate ideas and interrogate the subject matter of this blog post, but no AI-generated content was used verbatim.

Provenance

This blog post was first published on Monday 9 February 2026. It has not been updated. This is version 1.0.